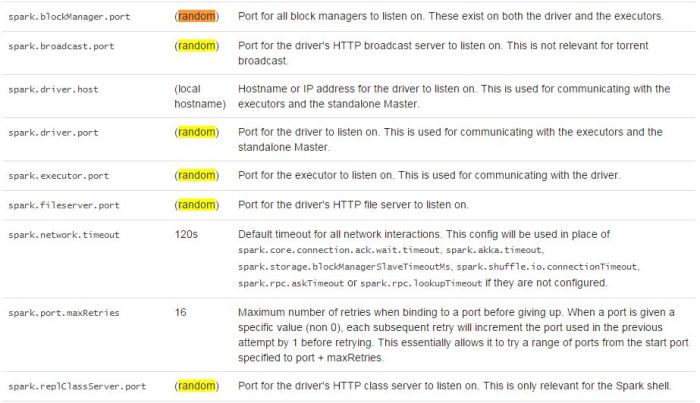

All spark-related benchs may fail if ports are used (spark.port.maxRetries not set) · Issue #66 · renaissance-benchmarks/renaissance · GitHub

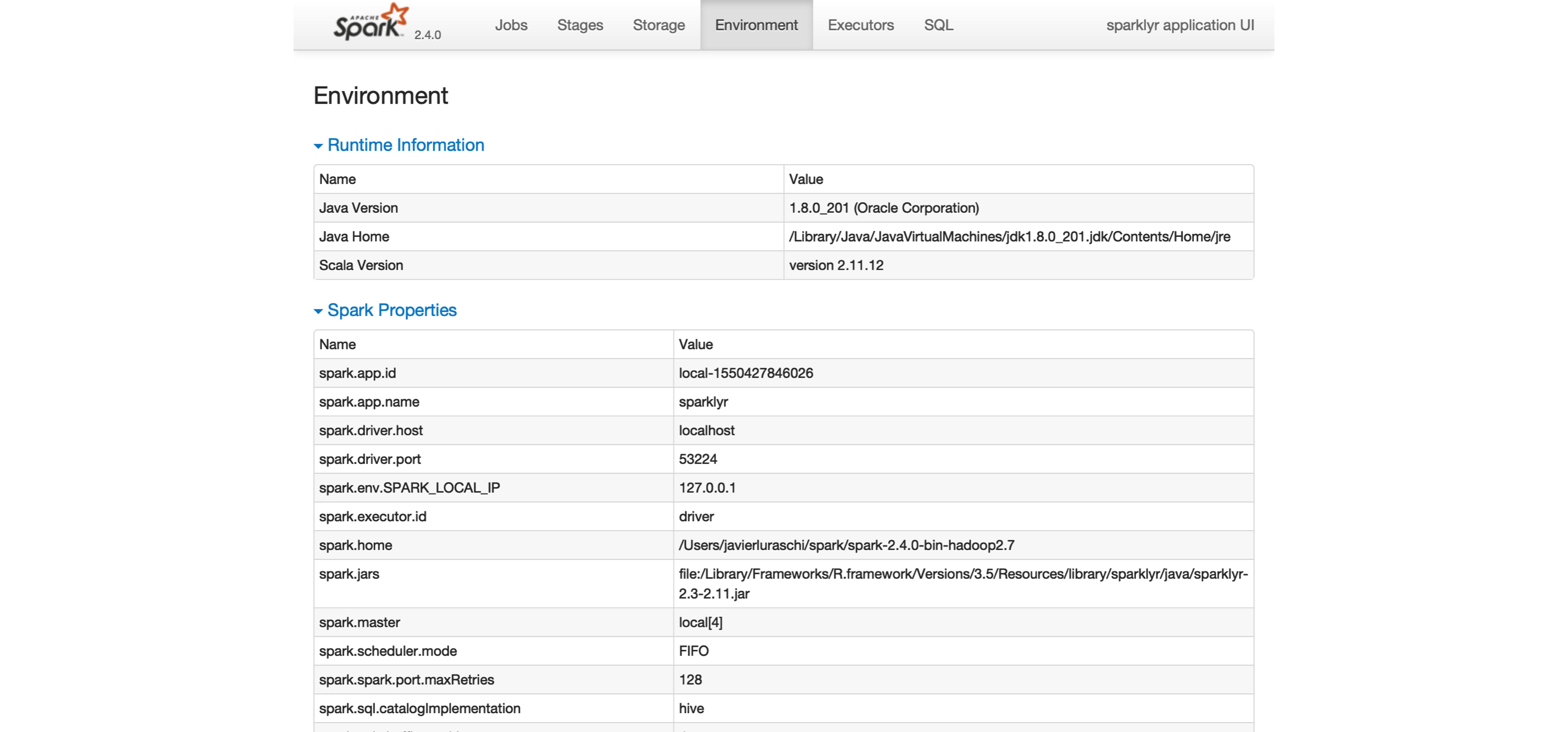

Address already in use: Service 'SparkUI' failed after 16 retries! - 轻风细雨's blog - CSDN Blog